This document examines the effects of humanized chatbots on customer satisfaction, particularly for angry customers. The author, Rhonda Hadi, a professor of marketing at the University of Oxford, discusses how humanizing chatbots can raise customer expectations and trigger negative emotional reactions when the bot fails to meet them. It presents research findings from studies on customer interactions with chatbots and suggests strategies for companies to manage customer anger and expectations in chatbot interactions.


Advancements in AI are continuously transforming the way companies operate, including how they interact with their customers. One clear illustration of this is the proliferation of customer service chatbots in the marketplace. In typical applications, these automated conversational agents exist on a company’s website or social media page, and either provide customers with information or help handle customer complaints. Remarkably, chatbots are expected to power 85% of all customer service interactions by 2020, with some analysts predicting the global chatbot market will exceed $1.34 billion by 2024. The cost-saving benefits are intuitive, but do chatbots improve customer service outcomes? While some industry voices believe that chatbots will improve customer service due to their speed and data synthesizing abilities, other experts caution that chatbots will worsen customer service and lead customers to revolt. Through a series of studies, we shed light on how customers react to chatbots. Our research finds support for both optimists and skeptics, contingent on the situation and specific characteristics of the chatbot.

gy designers often make deliberate attempts to humanize AI, for example, by imbuing voice-activated devices with conversational human voices. Industry practice shows these efforts also apply to customer service chatbots, many of which are given human-like avatars and names (see Box 1). Humanization, of course, is not a new marketing strategy.

When Humanizing Customer Service Chatbots Might Backfire

Rhonda Hadi

KEYWORDS Chatbots, Customer Service, Angry Customers, Avatars, AI

THE AUTHOR Rhonda Hadi, Professor of Marketing, Saïd Business School, University of Oxford, United Kingdom, Rhonda.Hadi@sbs.ox.ac.uk

NIM Marketing Intelligence Review, Vol. 11, No. 2, 2019, Humanizing Chatbots, doi 10.2478/nimmir-2019-0013


Product designers and brand managers have long encouraged consumers to view their products and brands as human-like, either through a product’s visual features or through brand mascots, like Mr. Clean or the Michelin Man. This strategy has generally been linked to improved commercial success: brands with human characteristics support more personal consumer-brand relationships and have been shown to boost overall product evaluations in several categories, including automobiles, cell phones, and beverages. Further, in the realm of technology, human-like interfaces have been shown to increase consumer trust. However, there is also evidence that in particular settings, these humanization attempts can elicit negative emotional reactions from customers, especially if the product does not deliver as expected.

a human-like way, customers tend to assume it encompasses a certain level of agency. That is, they expect the chatbot is capable of planning, acting and reacting in a similar manner to a human being. These heightened expectations not only increase customers’ hopes that the chatbot is capable of doing something for them, but they also increase customers’ beliefs that the chatbot should be held accountable for its actions and deserves punishment in case of wrongdoing. Of course, chatbots – no matter how human they may seem

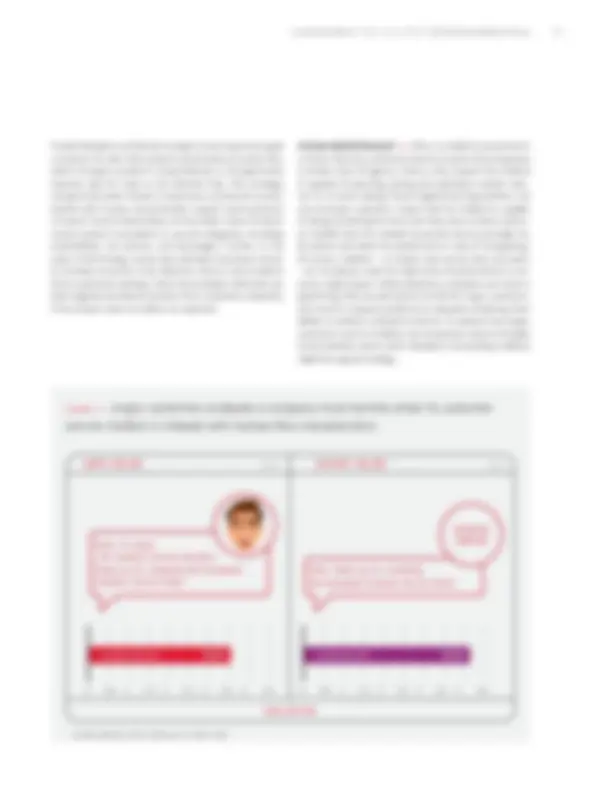

FIGURE 2 Angry customers evaluate a company more harshly when its customer service chatbot is imbued with human-like characteristics

[Bar chart. Axis: overall evaluation of the company on a 7-point scale. Standard bot (greeting: "Hello. Thank you for contacting the Automated Customer Service Center."): 4,… Humanized bot (greeting: "Hello. I'm Jamie, the Customer Service Assistant. Thank you for contacting the Automated Customer Service Center."): 3,…]


colleagues from the University of Oxford: Felipe Thomaz, Cammy Crolic, and Andrew Stephen. In our first study, we analyzed over 1.5 million text entries of customers interacting with a customer service chatbot from a global telecom company. Through natural language processing analysis, we found that humanization of the chatbot improved consumer satisfaction, except if customers were angry. For customers who entered the chat in an angry emotional state, humanization had a drastic negative effect on ultimate satisfaction. In a series of follow-up experiments, we used simulated chatbot interactions and manipulated both chatbot characteristics and customer anger. These experiments confirmed our initial analysis, as angry customers reported lower overall satisfaction when the chatbot was humanized than when it was not. Furthermore, results showed that the negative influence of humanized chatbots under angry conditions extends to harm customers’ repurchase intentions and evaluations of the company itself (see Figure 2).

At first glance, it may seem like it is always a good idea to humanize customer service chatbots. However, our research suggests that the consequences of humanizing chatbots are more nuanced and that the outcomes depend on both customer characteristics and the specific service context. We believe chatbot humanization may act as a double-edged sword: it enhances customer satisfaction for non-angry consumers but exacerbates negative responses from angry consumers. Therefore, companies should carefully consider whether or when to humanize their customer service chatbots. Based on our findings, we suggest the following guidance, which might be helpful in designing a company's (automated) customer service in an efficient, yet customer-friendly way:

determining customer reactions to humanized chatbots. Therefore, it is advisable to predetermine whether customers are angry as a first step. This could be done using keywords or real-time natural language processing. Angry customers could then be transferred to a non-humanized chatbot while others could be introduced to a humanized version. Another option might be to promptly divert angry customers to a human agent who can possibly be more empathetic and has more agency and flexibility to actually solve a problem to the customer’s satisfaction.

cally to complain, one can assume at least a moderate level of anger. Therefore, a non-humanized chatbot should be implemented in these settings to avoid potential negative effects on company reputation or purchase intentions when the bot is unable to offer adequate solutions. The use of humanized bots could be restricted to more neutral or promotion-oriented services like searches for product information or other assistance.
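
The routing guidance above can be illustrated with a minimal Python sketch. It assumes the simplest of the options mentioned, keyword matching, rather than real-time natural language processing. The keyword list, the `Route` names, and the `choose_route` function are hypothetical and are not taken from the studies described here; a production system would need a properly validated anger detector.

```python
import re
from enum import Enum


class Route(Enum):
    """Hypothetical destinations for an incoming customer chat."""
    STANDARD_BOT = "standard_bot"    # non-humanized chatbot
    HUMANIZED_BOT = "humanized_bot"  # chatbot with a name and avatar
    HUMAN_AGENT = "human_agent"      # live customer service agent


# Illustrative keyword list only; an assumption, not a validated lexicon.
ANGER_KEYWORDS = {
    "angry", "furious", "unacceptable", "ridiculous",
    "terrible", "worst", "outraged",
}


def looks_angry(message: str) -> bool:
    """Return True if the message contains any anger keyword."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    return bool(words & ANGER_KEYWORDS)


def choose_route(
    message: str,
    is_complaint_channel: bool = False,
    human_agent_available: bool = False,
) -> Route:
    """Pick a destination from the first message and the service context."""
    if looks_angry(message):
        # Angry customers go to a human agent when one is free,
        # otherwise to the non-humanized bot.
        if human_agent_available:
            return Route.HUMAN_AGENT
        return Route.STANDARD_BOT
    if is_complaint_channel:
        # Complaint settings imply at least moderate anger.
        return Route.STANDARD_BOT
    # Neutral or promotion-oriented requests can use the humanized bot.
    return Route.HUMANIZED_BOT
```

For example, `choose_route("This is unacceptable, I want a refund")` selects the non-humanized bot, while a neutral product question on a general channel is routed to the humanized version.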
